Structural Nested Mean Models for Causal Inference

Oisín Fitzgerald, January 2021

Introduction

What problem are we solving?

Causal inference considers questions such as: what would be the impact of giving someone a particular drug? There is a sense of action in a causal question, we are going to do something (hence Pearl's do notation (Pearl, 2009)). An associational question on the other hand, would ask: what is the most likely outcome of someone who is receiving a particular drug? It is passive (but still useful - e.g. predictive modelling). It is generally not the case that the answers to causal and associational questions will be the same, they can even lead to seemingly conflicting results. Most medical research asks a causal question; we wish to inform decisions. It is important we use the appropriate causal methods and thinking to answer the causal, rather than associational question (see Hernan and Robins (2020) for an in-depth treatment of this issue). There are many important considerations in answering causal questions from whether the question is worth answering, to what data needs to be collected (see Ahern (2018) for a good outline). This post considers arguably the least important aspect - the statistical methodology used to estimate the causal model parameters. I'll outline (as I poorly understand it) G-estimation of structural nested mean models (SNMM) for a single timepoint and two treatment options, and show some simulations using R along the way. First a brief review of the potential outcomes framework, those familiar with it can skip to the next section.

Potential outcomes framework

Within the potential outcomes framework causal inference becomes a missing data problem. For the case of two treatment levels there are two potential outcomes and . If someone gets then the observed outcome is (referred to as consistency), and is the counterfactual. That we only observe one potential outcome per person at a given time is referred to as the fundamental problem of causal inference (Holland, 1986). Our focus in this article will be calculating the conditional average treatment effect (CATE) where is a vector of effect modifiers.

To answer causal questions in a given dataset we require knowledge (or must make assumptions about) about the data generating process - in particular aspects of the treatment decision process (why did the clinical give patient A a particular drug?) and the outcome process (what is it about patient A that increases their risk of a particular outcome?). This knowledge allows us assess whether we can assume conditional exchangeability (unconfoundedness)

that within strata of we can consider treatment to have been randomly assigned, and positivity, that there exists some possibility that each patient could have received either treatment

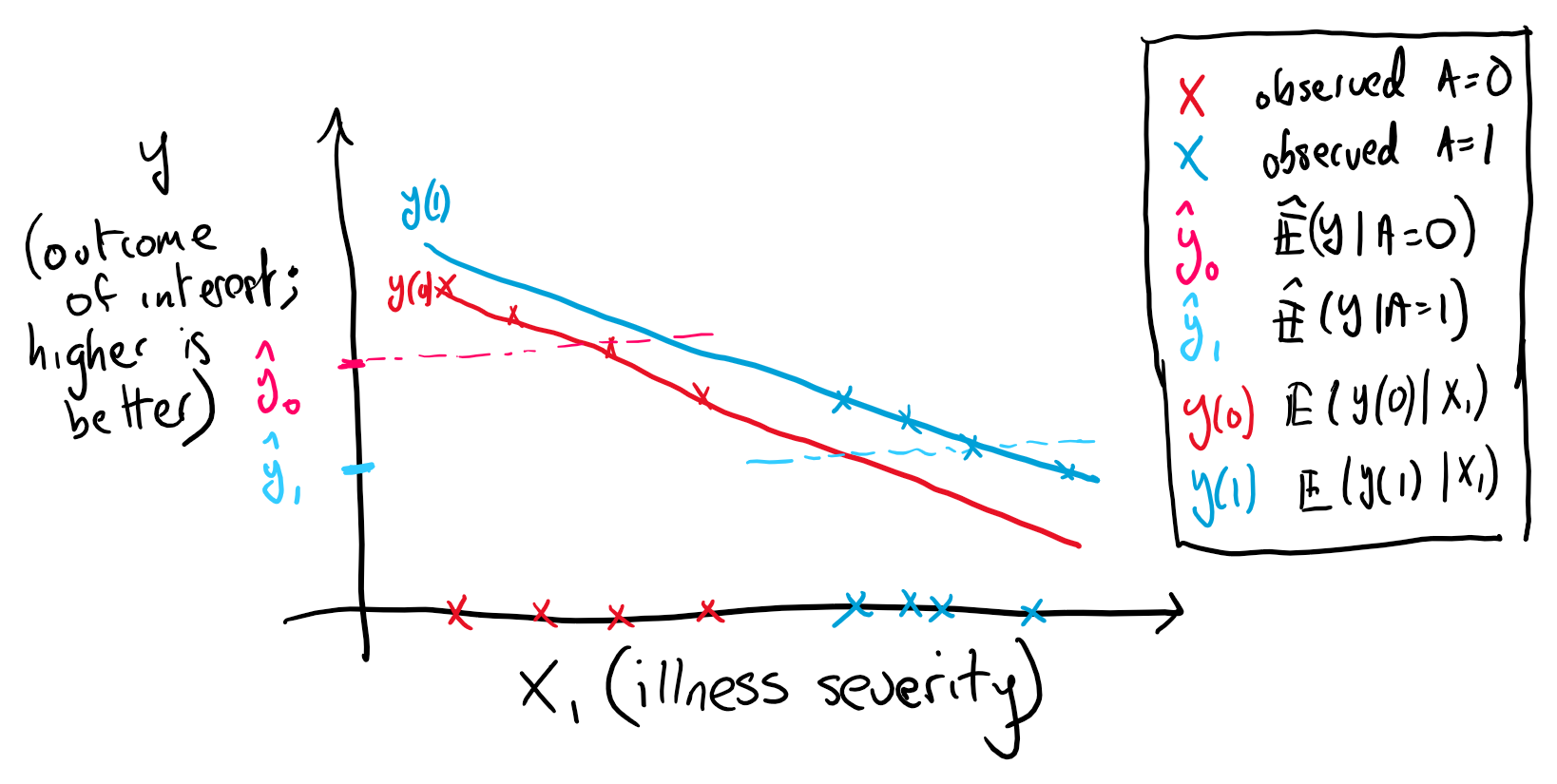

Conditional exchangeability will be key to constructing our estimation process and clarifies the difference between causal and associational analysis. To see this consider the canonical confounding example where sicker patients are more likely to get treated with a particular medicine (figure 1). A naive estimate of the difference may lead to the impression that treatment is harmful. However, if the treatment decision has been based on an illness severity factor, denoted by the random variable , that combines all factors predictive of the disease outcome then we have a biased result

In our example, only by accounting for can we get an unconfounded case where conditional exhangeability holds and the previous inequality becomes an equality.

Figure 1. Illustration of confounding. The more severely ill (high X1) are more likely to get treated leading to the situation where the average outcome is worse in the treated. Notice that positivity is violated in this illustration.

In summary, we will need to make certain assumptions (1) consistency (2) unconfoundedness and (3) positivity around the data generating process (often reasonable but unverifiable) in order to be able to calculate an unbiased treatment difference. For an in-depth treatment see Hernan and Robins (2020).

G-methods Family

Not all statistical approaches that work for treatment comparison at a single timepoint generalise to situations involving time-varying treatments (where there is treatment confounder feedback). The G (generalised) methods, developed by James Robins and colleagues - including structural nested mean models (SNMMs), marginal structural models and G-computation, apply in both single and multi-stage treatment effect estimation (see references). If we want to compare the effectiveness of two treatment regimes or policies (e.g. intensive versus standard blood pressure control) using an observational sources such as electronic medical records the G-methods are an obvious choice. Here, we describe SNMMs and G-estimation in the context of a single treatment where there are a large number of competing approaches.

Structural Nested Mean Models

Structural nested mean models (SNMMs) are models for the contrast (mean difference) of two treatment regimes. This difference can be conditional on a set of effect modifying covariates. The term 'structural' indicates that they are causal models, and in a longitudinal setting the model takes a 'nested' form. Assume we observe a dataset where is a random variable indicating a patient history (covariates; e.g. blood pressure), is a realisation of for individual , is the outcome of interest and is the treatment indicator. In the single timepoint setting SNMMs involves fitting a model for the CATE

where is the potential outcome under treatment and the variables are effect modifiers. In particular we will discuss linear single timepoint SNMMs, where indicates a individual patients treatment history and a possible transformation of the original data (e.g. spline) resulting in a vector of length

Within medicine it is generally considered reasonable to assume the effect modifying variables are generally a subset of the history , with the dimension of , possible far smaller than . While a large numbers of factors - genetic, environmental and lifestyle - may influence whether someone develops a particular disease their impact on the effectiveness of a particular treatment may be neglible or noisy (effect modification is a higher order effect) in finite sample. Nevertheless, for simplicity of notation we will assume from hereon.

There are several reasons we might be interested in only estimating the treatment contrast versus the outcome model under each treatment directly. One way to think about the observed outcome is as being composed of two components, a prognostic component and a predictive component. The prognostic component determines an individuals likely outcome given a particular medical history, and the predictive component determines the impact of a particular treatment. We can separate the expected outcome for a patient into these components

where the setting in corresponds to a control or baseline case. In many cases may be a more complex function than (Hahn, Murray & Carvalho, 2020). The potential for misspecification of or desire for more parsimonious model motivates attempting to directly model . If the final model will be used in practise, and must be explainable to subject matter experts, since empirically may be simpler there may be large improvements in interpretability if we model rather than an alternative. The parameter set of is estimated using G-estimation, which we turn to next.

A Review: Independence and Covariance

G-estimation builds upon some basic some facts about conditional independence and covariances which we briefly review. The conditional independence of and given is denoted as . For probability densities this translates to . A direct result of this is that the conditional covariance of and given is equal to zero.

Where the third line follows from the ability to factorise the conditional densities . We also note that 1) this holds if we replace or by a function of or and , for example and 2) relatedly that .

G-Estimation

G-estimation is an approach to determining the parameters of a SNMM. As we are modelling a treatment contrast in a situation where only one treatment is observed per individual we need a method that accounts for this missingness. There are two explanations below, the second is more general, with some repitition.

Explanation 1: Additive Rank Preservation

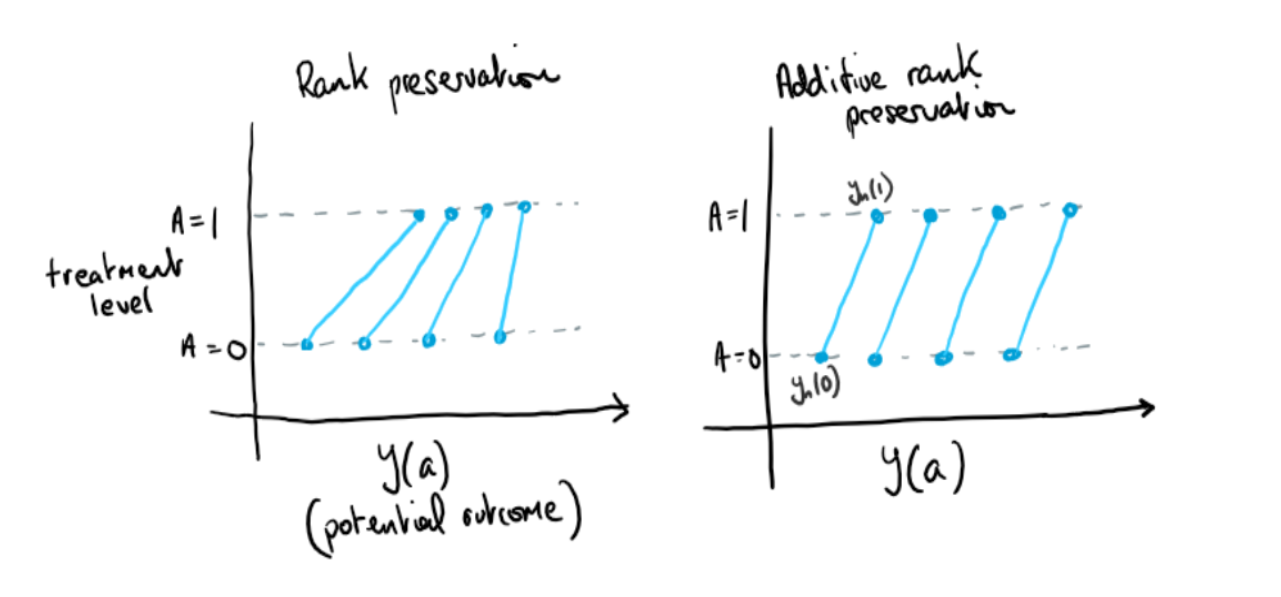

One approach to explaining G-estimation is through assuming additive rank preservation with regard to the treatment effect (Hernan & Robins, 2020). Additive rank preservation is the assumption that the treatment effect is the same for everyone, that . We emphasise that this is at the individual level (see figure 2). As shown later it is not a requirement for G-estimation that this assumption holds, it is expository tool.

Figure 2. Illustration of additive and nonadditive rank preservation

Notice that for the case of additive rank preservation with no effect modification the following holds

If we call this final expression then utilising the assumption of unconfoundedness this should be uncorrelated with any function of the treatment assignment mechanism, conditional on the confounders . For this case of no effect modification we'll let (we'll return to choice of later). We then have the estimating equation . For the cases where is unknown we replace it with an estimate. This equation can then be solved for the unknown , giving us an approach towards estimation. We continues this case below in Example 1.

Explanation 2: More General Case

Now consider the more general case where is a vector of parameters of our SNMM . Our analog of is now equal to in expectation

Where we make use of the consistency assumption in going from line two to three. As a result we have the following estimating equation for the general case in a single stage setting which is zero in expectation . Note that we could mean center which we will return to. For the case where can be expressed as a linear model this can be explicity solved, we outline this case below in Example 2.

Some examples

Example 1

In the case of (the average treatment effect (ATE)), i.e. and we have an explicit solution

As mentioned, in observational studies where the treatment assignment mechanism is unknown we replace (the propensity score) with an estimate, using e.g. logistic regression or more complex models.

Let's simulating this situation for a data generating model of the form

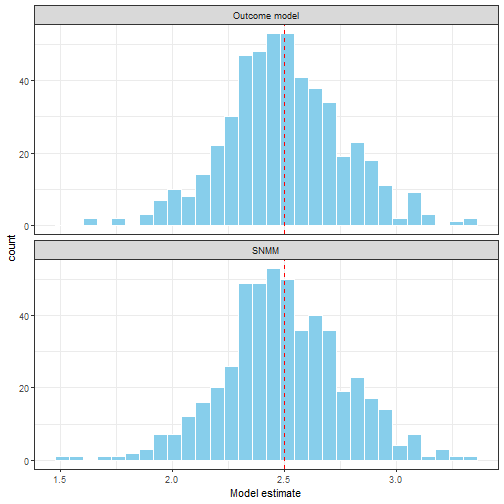

Notice observations with larger values of are more likely to be treated. We'll compare fitting a SNMM for the parameter with fitting an linear model for the full conditional expectation. The simulation set up is adapted from Chakraborty and Moodie (2013). As shown in figure 3 both methods return similar results. This is to be expected; the advantage of SNMMs and G-estimation is primarily in situations where the prognostic component is complex and we want a parsimonious model (we show this case below), and in time-varying treatment setting (in part 2).

## SIMULATION 1

M <- 500 # number of rins

tauM <- replicate(M, {

N <- 100

# generate data

h1 <- runif(N,-0.5,3)

ps <- function(x) 1/(1 + exp(2 - 1.8*h1))

a <- 1*(ps(h1) < runif(N))

y <- -1.4 + 0.8*h1 + 2.5*a + rnorm(N)

# estimate probability of treatment

pm <- glm(a ~ h1,family = binomial())

ph <- fitted(pm)

w <- (a-ph)

# estimate treatment effect

tauh_g <- sum(w*y)/sum(a*w)

tauh_lm <- lm(y ~ h1 + a)$coef["a"]

c(tauh_g,tauh_lm)

})

Figure 3. A comparison of OLS estimation of the outcome model E(Y|A,H) and G-estimation of the SNMM

Example 2

Now consider the case where is a function of several variables. Our decision rule for which treatment to use must consider several variables. We can model this using a linear model with covariates . The resulting empirical estimating equation is

Here we have replaced with which changes nothing as is treated as a constant. As stated this appears to be a single equation with unknowns. However, as is an 'arbitrary' function we can convert it into equations through choosing to be the vector valued function . This gives estimating equations

In order to write the above estimating equations in matrix/vector form let be our effect modifier/confounder design matrix, and . Then our estimating equations in matrix/vector form are . Solving for gives

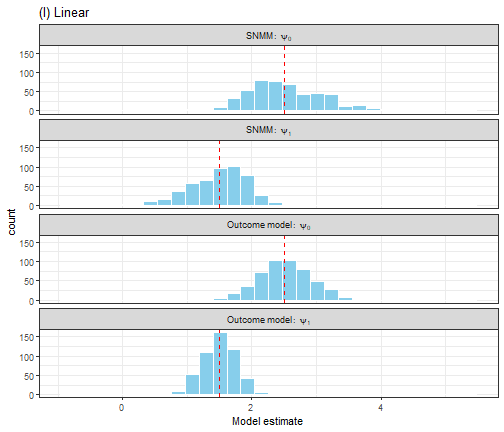

Simulating this situation, again building the settings off Chakraborty and Moodie (2013), we have two settings, with the component (3) alternatively linear and non-linear. The treatment effect component is always linear - we'll come to non-linar next.

We compare G-estimation of the SNMM with a (linear) outcome model for the full expectation . While this shows the strength of SNMM in avoiding misspecification it is not entirely fair, as in an empirical setting a simple plot of the data would reveal that a linear model is a bad idea.

gest_snmm <- function(X,y,a,ph) {

w <- (a-ph)

W <- diag(w)

A <- diag(a)

t1 <- solve(t(X) %*% W %*% A %*% X)

t2 <- t(X) %*% W %*% y

t1 %*% t2

}## SIMULATION 2

M <- 500 # number of runs

tauM <- replicate(M, {

# generate data for linear and non-linear cases

N <- 100

h1 <- runif(N,-0.5,3)

ps <- function(x) 1/(1 + exp(2 - 1.8*h1))

a <- 1*(ps(h1) < runif(N))

psi0 <- 2.5

psi1 <- 1.5

y1 <- -1.4 + 0.8*h1 + psi0*a + psi1*a*h1 + rnorm(N)

y2 <- -1.4*h1^3 + exp(h1) + psi0*a + psi1*a*h1 + rnorm(N)

# estimate probability of treatment

pm <- glm(a ~ h1,family = binomial())

H <- cbind(rep(1,N),h1)

ph <- fitted(pm)

# estimate treatment effect

g1 <- as.vector(gest_snmm(H,y1,a,ph))

g2 <- as.vector(gest_snmm(H,y2,a,ph))

ols1 <- lm(y1 ~ h1 + a + h1*a)$coef[c("a","h1:a")]

ols2 <- lm(y2 ~ h1 + a + h1*a)$coef[c("a","h1:a")]

c(g1,g2,ols1,ols2)

})

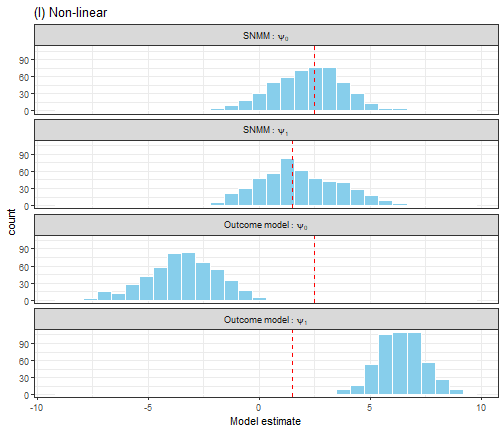

Figure 4. A comparison of OLS estimation of the outcome model E(Y|A,H) and G-estimation of the SNMM for a linear outcome model.

Figure 5. A comparison of OLS estimation of the outcome model E(Y|A,H) and G-estimation of the SNMM for a non-linear outcome model.

More efficient G-estimation

Returning to the idea that setting follows from , it turns out there is a more efficient form of G-estimation if we take full advantage of this equality. As per Wikipedia "a more efficient estimator needs fewer observations than a less efficient one to achieve a given performance".

Above we use an empirical version of as out estimating equation. That is because we don't know . However, we can estimate it; to do this fit a model and use it to predict for all observations. Then rather than using in our estimation procedures we use . The need to estimate several models before G-estimation may seem to add the number of possible sources of error however it actually has the opposite impact. This approach is doubly robust, meaning that if either x or y are correctly specified then the estimated causal effect is unbiased. Correct specification refers to the function form of the model, e.g. aspects such as linear/non-linear effects and interactions. Failure to include confounders would lead to bias even if the model was "correct" for the measured confounders. For our linear model, with m being, the doubly robust estimate is

What is the intuition behind this? We can think of it as information simplification - by taking out we are allowing the estimation to focus on changes with for is informative about what variation is not due to the treatment effect. Generally noise reduction signal enhancement. For a more detailed argument see Athey and Imbens (2019) or Nie and Wager (2017). We can still use our avoid function gest_snmm for efficient G-estimation, but rather than passing in the actual y we pass in which we can estimate using an approach of choice.

Non-linear effect modification

So far we have talked about and simulated linear , but what if the effect modification is non-linear? Restricting ourselves to linear models in the age of machine learning seems so uncool. I'll add that many of the ideas underlying G-estimation and snmm come up in gradient trees (Athey 2019) or R-learning (Nie & Wager 2017). As a first step towards non-linear models for we'll consider approaches that involve transformations of the design matrix (the matrix containing our effect modifiers and generally a vector of 1s for the main (first order) effect of treatment). So we have a transformation , including methods such as the Fourier transform, polynomial expanstions, spline basis matrices. We then replace with in our estimation procedure

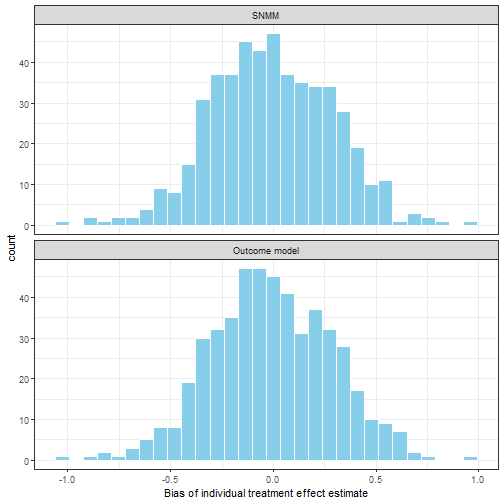

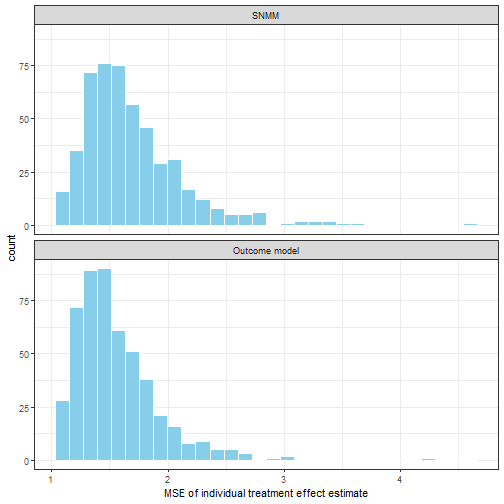

To illustrate this, lets do another simulation. In this case is non-linear.

We have increased the number of variables to two (clearly the simulations should not be relied upon!). For this simulation I've increased the sample size to .

## SIMULATION 3

M <- 500 # number of runs

tauM <- replicate(M, {

# generate data

N <- 1000

x1 <- runif(N,-0.5,3)

x2 <- rnorm(N,2,3)

ps <- function(x1,x2) 1/(1 + exp(2 - 1.8*x1 + 0.2*x2))

a <- 1*(ps(x1,x2) < runif(N))

psi0 <- 2.5

psi1 <- 1.5

tau <- psi0 + 0.7*exp(x1) + 0.5*x2 + 0.4*x2^2 + rnorm(N)

y <- 1.2*x2 - 1.4*x2^2 + 0.8*exp(x1) + a*tau + rnorm(N,sd = 3)

# estimate probability of treatment

pm <- glm(a ~ x1 + x2,family = binomial())

Xs <- cbind(rep(1,N),bs(x1),bs(x2))

ph <- fitted(pm)

# estimate treatment effect

ols <- lm(y ~ bs(x1) + bs(x2) + a + bs(x1)*a + bs(x2)*a)

df0 <- data.frame(x1,x2,a=0)

df1 <- data.frame(x1,x2,a=1)

tau_ols <- predict(ols,df1) - predict(ols,df0)

g <- gest_snmm(Xs,y - predict(ols,df0),a,ph)

tau_g <- as.vector(Xs %*% g)

c(bias_g=mean((tau_g-tau)),

bias_l=(mean((tau_ols-tau))),

mse_g=mean((tau_g-tau)^2),

mse_l=(mean((tau_ols-tau)^2)))

})

Figure 6. Average bias of individual treatment effect

Figure 7. Average meas square error of individual treatment effect

Standard errors and confidence intervals

Quantifying uncertainty in our parameter estimates is important. For G-estimation of SNMMs the non-parametric bootstrap offers a general approach to estimation of standard errors, allowing incorporation of the uncertainty arising from estimating and .

Conclusion and up-next

We've outlined the linear SNMM and G-estimation, focusing on the single stage setting. While SNMM are not often used in practise I hope that as we go through this series their strengths will become clear. We have largely dealt with simplistic situations in which the CATE is of interest to emphasise the fundamentals of the method. G-estimation is based on setting an empirical covariance equal to zero building off the assumptions from causal inference - ignorability/unconfoundedess, consistency and positivity. As mentioned at the beginning there is much more to causal inference than the methods and often subject matter knowledge plays an important role in justifying the reasonableness of these assumptions and designing the data collection/extraction process.

There are several things we haven't covered in this tour of SNMM and G-estimation:

Non-continous outcomes - in particular binary outcomes

More than point estimates - quantiles or distributions

Treatments that vary over time

Simulations that really test SNMM (or it's competitors) in the complex and noisy datasets common in data science practise

We'll come back to these topics, in particular time varying treatments in subsequent posts. Thanks for reading.

Thanks to Oscar Perez Concha for reading and discussing drafts of this.

Reading and links

Ahern, Jennifer. 2018. Start with the ’c-Word,’ Follow the Roadmap for Causal Inference. American Public Health Association.

Athey, Susan, Julie Tibshirani, Stefan Wager, and others. 2019. Generalized Random Forests. The Annals of Statistics 47 (2): 1148–78.

Chakraborty, Bibhas, and Erica EM Moodie. 2013. Statistical Methods for Dynamic Treatment Regimes: Reinforcement Learning, Causal Inference, and Personalized Medicine. Springer.

Hahn, P Richard, Jared S Murray, Carlos M Carvalho, and others. 2020. Bayesian Regression Tree Models for Causal Inference: Regularization, Confounding, and Heterogeneous Effects. Bayesian Analysis.

Hernan, Miguel A, and James M Robins. 2020. Causal Inference: What If?” Boca Raton: Chapman & Hall/CRC

Holland, Paul W. 1986. Statistics and Causal Inference. Journal of the American Statistical Association 81 (396): 945–60.

Nie, Xinkun, and Stefan Wager. 2017. Quasi-Oracle Estimation of Heterogeneous Treatment Effects. arXiv Preprint arXiv:1712.04912.

Pearl, Judea, and others. 2009. Causal Inference in Statistics: An Overview. Statistics Surveys 3: 96–146.

Robins, James. 1986. A New Approach to Causal Inference in Mortality Studies with a Sustained Exposure Period—Application to Control of the Healthy Worker Survivor Effect. Mathematical Modelling 7 (9-12): 1393–1512.

Robins, James M, Donald Blevins, Grant Ritter, and Michael Wulfsohn. 1992. G-Estimation of the Effect of Prophylaxis Therapy for Pneumocystis Carinii Pneumonia on the Survival of Aids Patients. Epidemiology, 319–36.

Robins, James M, Miguel Angel Hernan, and Babette Brumback. 2000. Marginal Structural Models and Causal Inference in Epidemiology. LWW.

Vansteelandt, Stijn, Marshall Joffe, and others. 2014. Structural Nested Models and G-Estimation: The Partially Realized Promise. Statistical Science 29 (4): 707–31.